Building Smart Workflows with Azure AI

What started as a quick experiment turned into a surprisingly powerful AI prototype.

I’ve been curious for a while about just how frictionless it has become to stitch together Microsoft’s AI and automation offerings into something functional, fast, and valuable. To test it, I spun up a proof of concept using Copilot Studio, Azure services, and a bit of Python code.

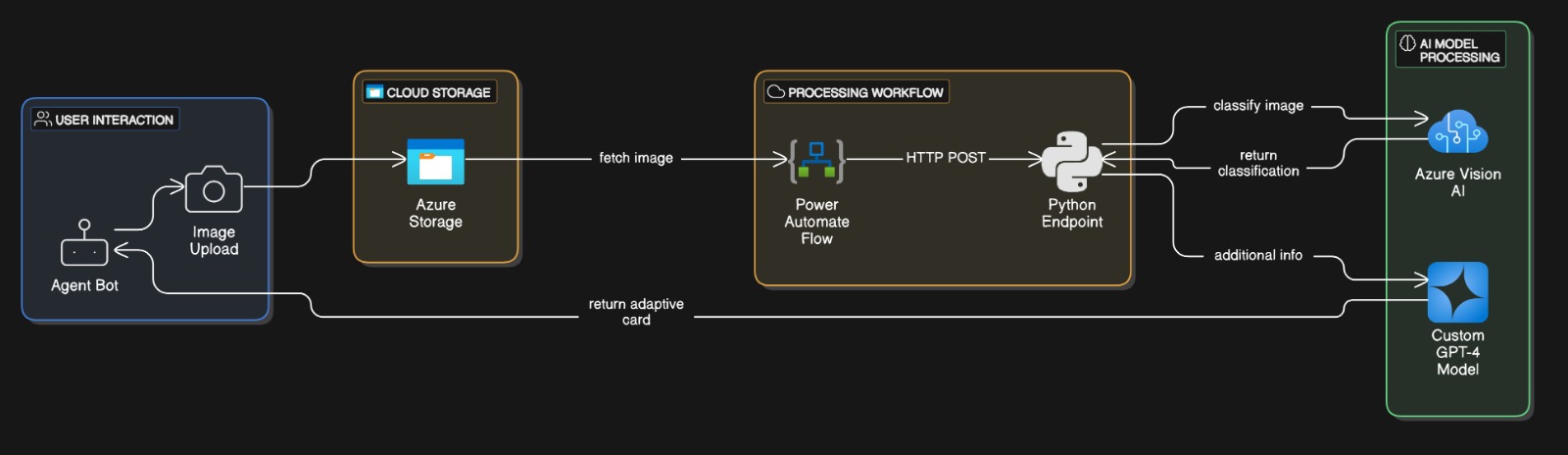

Here’s what I built: a Copilot Studio agent that interacts with a user and prompts them to upload an image. Once uploaded, the image is saved to Azure Blob Storage. From there, a Power Automate flow fetches the image and sends it to an Azure Function App running a Python script. That script is the orchestrator of the system, calling two AI services in turn:

- Azure Vision AI – trained to classify the image.

- Custom GPT-4 Model – enriched with vendor-specific catalog data and other relevant metadata via Azure AI Foundry.
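To make the two-step orchestration above concrete, here is a minimal sketch of how such a script might chain the calls. It is not the code from the prototype: the `classify` and `enrich` callables are hypothetical stand-ins for the Vision and GPT-4 endpoints, and the `min_confidence` threshold and the payload field names are assumptions added for illustration.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class Classification:
    label: str
    confidence: float


def orchestrate(
    image_bytes: bytes,
    classify: Callable[[bytes], Classification],
    enrich: Callable[[str], dict],
    min_confidence: float = 0.5,  # assumed cutoff, not from the original prototype
) -> str:
    """Classify the image first, then enrich the label; return a JSON string."""
    result = classify(image_bytes)
    if result.confidence < min_confidence:
        # Skip the (more expensive) language-model call when the classifier is unsure.
        return json.dumps({
            "status": "unrecognized",
            "label": result.label,
            "confidence": result.confidence,
        })
    return json.dumps({
        "status": "ok",
        "label": result.label,
        "confidence": result.confidence,
        "product": enrich(result.label),
    })


# Hypothetical stand-ins for the two AI services, so the sketch runs offline.
def fake_classify(image_bytes: bytes) -> Classification:
    return Classification(label="espresso-machine", confidence=0.93)


def fake_enrich(label: str) -> dict:
    return {
        "name": "Espresso Machine X1",
        "vendor": "Contoso",
        "links": ["https://example.com/x1"],
    }


payload = orchestrate(b"raw image bytes", fake_classify, fake_enrich)
print(payload)
```

Passing the two services in as plain callables keeps the orchestration logic testable without any Azure credentials; in a real Function App they would wrap the actual endpoint calls.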

Vision AI handles the initial classification. Based on that result, the GPT-4 model pulls in contextual product information, metadata, vendor-specific insights, and related links from a preloaded dataset. The final response is structured as a JSON payload and transformed into an Adaptive Card, which is then displayed directly to the user in the agent’s chat interface.
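The last step, turning the JSON payload into an Adaptive Card, could look roughly like the sketch below. The payload field names (`product`, `name`, `links`) are hypothetical, and the card version `1.5` is an assumption about what the target channel supports; the `TextBlock`, `FactSet`, and `Action.OpenUrl` element types come from the Adaptive Cards schema.

```python
import json


def build_card(payload: dict) -> dict:
    """Map a product payload (hypothetical shape) onto an Adaptive Card dict."""
    product = payload.get("product", {})

    # Every simple field except the title and the links becomes a fact row.
    facts = [
        {"title": key.capitalize(), "value": str(value)}
        for key, value in product.items()
        if key not in ("name", "links")
    ]

    # Each related link becomes a button that opens the URL.
    actions = [
        {"type": "Action.OpenUrl", "title": f"Link {i}", "url": url}
        for i, url in enumerate(product.get("links", []), start=1)
    ]

    return {
        "type": "AdaptiveCard",
        "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
        "version": "1.5",  # assumed; use the version your channel supports
        "body": [
            {
                "type": "TextBlock",
                "text": product.get("name", payload.get("label", "Unknown item")),
                "weight": "Bolder",
                "size": "Medium",
                "wrap": True,
            },
            {"type": "FactSet", "facts": facts},
        ],
        "actions": actions,
    }


# Example payload with made-up values, for illustration only.
sample = {
    "label": "espresso-machine",
    "product": {
        "name": "Espresso Machine X1",
        "vendor": "Contoso",
        "category": "Kitchen",
        "links": ["https://example.com/x1"],
    },
}

card = build_card(sample)
print(json.dumps(card, indent=2))
```

Keeping the card as a plain dictionary means the same function can be unit-tested locally and its output handed to whatever component renders the card.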

What impressed me wasn’t just how well it worked, but how fast everything came together. Blob Storage, Power Automate, Vision AI, Azure Functions, GPT-4: all the pieces clicked. Azure’s AI building blocks have matured to the point where they feel genuinely modular.

Of course, the ease of assembly brings new challenges. Once something is working, you need to think about scalability, cost controls, and architectural decisions. Can the Python function handle high-concurrency traffic? What happens when the catalog data expands or needs real-time updates? How do I monitor prompt quality and AI performance across endpoints?

This proof of concept reminded me: the hard part isn’t always the code anymore. It’s how you shape the system around the code. The more these tools abstract complexity, the more important it becomes to design with intent.

Looking Ahead

I see this concept evolving into something that adds real value to a user’s workflow: intelligent, visual-first interactions that surface recommendations with commercial impact. There is plenty of room for refinement, and it all starts with something as simple as a single image upload.